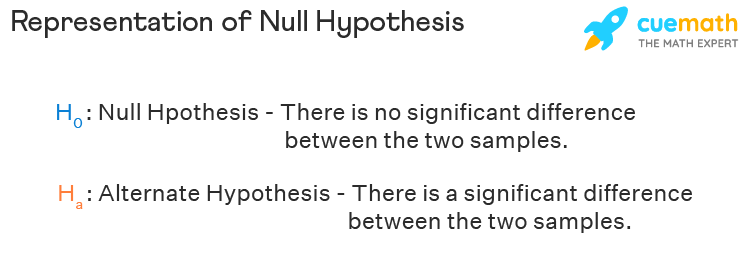

In statistics, the null hypothesis is a cornerstone of empirical inquiry. It is a statement that there is no effect, no difference, or no relationship between the variables in a given study. This default frames the research process: after collecting data, researchers either reject the null hypothesis or fail to reject it. But what does this mean in practical terms? Let’s work through a few examples of null hypotheses, what testing them involves, and why the concept attracts so much interest.

Consider a familiar belief: that drinking coffee improves cognitive performance. Many people are convinced that this daily ritual sharpens their productivity and mental acuity. To examine the belief scientifically, one might formulate a null hypothesis: “There is no difference in cognitive performance between individuals who consume coffee and those who do not.” This hypothesis lays the groundwork for an experiment, paving the way for systematic observation and statistical testing.
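In conventional notation, the coffee example becomes a pair of competing statements about population means. The symbols $\mu_{\text{coffee}}$ and $\mu_{\text{none}}$ are introduced here for illustration, standing for the average cognitive-test score in each population, and the alternative is assumed to be two-sided (a study could instead test only for improvement):

$$
H_0:\ \mu_{\text{coffee}} = \mu_{\text{none}}
\qquad\text{versus}\qquad
H_1:\ \mu_{\text{coffee}} \neq \mu_{\text{none}}
$$

A test rejects $H_0$ when data like those observed would be sufficiently unlikely if $H_0$ were true, conventionally when the p-value falls below a chosen significance level $\alpha$ such as 0.05.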

To test this hypothesis, researchers might conduct a study with two groups of participants, one that drinks coffee and one that abstains. Through careful design (for example, randomly assigning participants to the groups), they would measure various cognitive abilities, perhaps through memory tests or problem-solving tasks. The data would then be analyzed with a statistical test to judge whether the observed difference is larger than chance alone would plausibly produce. If the results show no statistically significant difference, the researchers fail to reject the null hypothesis. That outcome does not prove coffee has no effect; it means only that the study found insufficient evidence of one, so the benefits its enthusiasts attribute to it remain unconfirmed rather than disproven.
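As one concrete way to run such an analysis, the sketch below uses a two-sided permutation test on the difference in mean scores. The scores are invented for illustration, and the permutation test is one choice among several (a two-sample t-test is the more common textbook option). The idea is that if the null hypothesis is true, the "coffee" and "no coffee" labels are interchangeable, so reshuffling them shows how large a difference chance alone tends to produce.

```python
import random


def mean(values):
    return sum(values) / len(values)


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Returns (observed_difference, p_value).
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # relabel participants at random, as H0 allows
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        # Small tolerance so float rounding does not hide exact ties.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Hypothetical memory-test scores (invented data, not from any study).
coffee = [78, 82, 75, 88, 80, 84, 79, 86, 77, 83]
no_coffee = [76, 80, 74, 85, 79, 81, 78, 82, 75, 80]

diff, p = permutation_test(coffee, no_coffee)
alpha = 0.05
print(f"difference in means = {diff:.2f}, p = {p:.3f}")
if p < alpha:
    print("Reject H0: the groups differ more than chance alone would suggest.")
else:
    print("Fail to reject H0: the data do not show a clear difference.")
```

With these made-up numbers the coffee group scores about 2.2 points higher on average, yet the difference is small relative to the spread within each group, which is exactly the situation in which a study fails to reject the null hypothesis without proving it.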

Yet the implications of this study extend beyond coffee. The null hypothesis is often described as a gatekeeper in scientific exploration, a guard against the cognitive biases that can distort our perception of reality. By adopting a default position of skepticism, treating a claimed effect as absent until the data provide sufficient evidence for it, researchers ensure that only strong evidence can shift the pendulum of understanding.
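That default of skepticism has a precise meaning: the significance level α caps how often a test rejects a null hypothesis that is actually true (a Type I error). The simulation below is a sketch under simplifying assumptions (normally distributed scores with a known standard deviation of 1, so a two-sample z-test applies). It draws both groups from the same distribution, making H0 true by construction, and counts how often p < 0.05.

```python
import math
import random
from statistics import NormalDist


def z_test_p_value(a, b, sigma=1.0):
    """Two-sided z-test for equal means, assuming a known common sigma."""
    se = sigma * math.sqrt(1 / len(a) + 1 / len(b))
    z = (sum(a) / len(a) - sum(b) / len(b)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(n_experiments=10_000, n_per_group=30, alpha=0.05, seed=1):
    """Fraction of simulated experiments that wrongly reject a true H0."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_experiments):
        # Both groups come from the same N(0, 1) distribution, so H0 is true.
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        if z_test_p_value(a, b) < alpha:
            rejections += 1
    return rejections / n_experiments


rate = false_positive_rate()
print(f"Rejected a true H0 in {rate:.1%} of experiments")  # should be near alpha = 5%
```

Lowering α makes false alarms rarer, at the cost of needing stronger evidence (or larger samples) to detect effects that are real.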

This habit of confronting preconceived notions is one that many people find fascinating. It compels us to question the norm and look for the reasons behind everyday experiences. Perhaps this curiosity explains the widespread interest in what might seem like an innocuous beverage: coffee. Understanding its true impact on cognitive performance could lead to broader insights about lifestyle choices and their effects on our mental faculties.

Moreover, the null hypothesis is used well beyond individual curiosities. Consider how it shapes critical public health discussions. Take smoking, for example. The null hypothesis might state: “There is no causal relationship between smoking and lung cancer.” Testing it directs researchers toward extensive studies that analyze data across demographics, environments, and smoking histories.
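For categorical data like these, one standard tool is Pearson's chi-square test of independence on a 2×2 table of smoking status against lung-cancer diagnosis. The counts below are invented for illustration only. Such a test addresses association; a causal conclusion requires converging evidence from many studies, not a single test. With one degree of freedom the tail probability can be computed from the complementary error function, because a chi-square variable with 1 degree of freedom is the square of a standard normal variable.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table.

    table = [[a, b], [c, d]]; returns (statistic, p_value) with 1 degree
    of freedom. No continuity correction is applied.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    total = sum(row_totals)
    stat = 0.0
    for i in range(2):
        for j in range(2):
            # Count expected in this cell if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / total
            stat += (table[i][j] - expected) ** 2 / expected
    # Chi-square with 1 df is Z**2, so P(X > stat) = P(|Z| > sqrt(stat)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Hypothetical counts (invented): rows = smokers, non-smokers;
# columns = lung cancer, no lung cancer.
counts = [[90, 910],
          [20, 980]]
stat, p = chi_square_2x2(counts)
print(f"chi-square = {stat:.1f}, p = {p:.2e}")
```

A tiny p-value here would lead researchers to reject the null hypothesis of independence for this (made-up) sample.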

Through rigorous testing across many studies, scientific inquiry eventually produced overwhelming evidence linking smoking to lung cancer. The null hypothesis was rejected, and the finding led to powerful public health messages that have changed societal behavior. Part of the enduring interest in this example lies in how strongly such research can shape collective decision-making and policy. By overturning unfounded assumptions, science cultivates a more informed public, capable of making choices grounded in empirical evidence rather than conjecture.

Another dimension of the null hypothesis emerges in psychological research. Imagine a study examining whether high levels of stress adversely affect students' test scores. Because such a study measures both variables rather than manipulating stress, the null hypothesis could state: “There is no association between stress levels and test scores.” The study could recruit participants from various demographics, measure their self-reported stress, and correlate it with exam performance.
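Because this design correlates two measured quantities, the null hypothesis amounts to "the population correlation between stress and scores is zero." Below is a minimal sketch with invented data, using a permutation test so the example stays self-contained (a t-test on Pearson's r is the common alternative). Shuffling the scores breaks any real pairing between the two variables, which is exactly what H0 assumes.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_permutation_test(x, y, n_permutations=10_000, seed=0):
    """Two-sided permutation test of H0: no association between x and y."""
    rng = random.Random(seed)
    observed = pearson_r(x, y)
    shuffled = list(y)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)  # destroy the pairing, as H0 allows
        if abs(pearson_r(x, shuffled)) >= abs(observed) - 1e-12:
            extreme += 1
    return observed, (extreme + 1) / (n_permutations + 1)


# Hypothetical data (invented): self-reported stress (1-10) and exam scores.
stress = [2, 3, 4, 5, 5, 6, 7, 8, 8, 9, 3, 6]
scores = [88, 85, 84, 80, 82, 76, 74, 70, 73, 65, 86, 78]
r, p = correlation_permutation_test(stress, scores)
print(f"r = {r:.2f}, p = {p:.4f}")
```

Rejecting H0 here would establish an association in the sample, not that stress is what lowers the scores.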

The results could reveal intricate relationships, including underlying factors such as socioeconomic background or access to resources that may affect both stress and performance. A correlation alone would not show that stress causes lower scores, so findings like these open further questions about educational equity and mental health support systems. In engaging with the null hypothesis, researchers often touch on societal issues that reverberate beyond academia, prompting discussions about systemic reform and individual well-being.
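The role of such underlying factors can be made concrete with a small simulation. In the hypothetical model below, an invented binary variable for access to resources lowers stress and raises scores, while stress has no direct effect on scores at all. Pooling everyone still produces a clear negative correlation, which largely vanishes within each resource group. All parameters are assumptions chosen for illustration.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate(n=2000, seed=3):
    """Hypothetical model: resources drive both stress and score."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        high_resources = rng.random() < 0.5
        # Stress never enters the score formula; only resources do.
        stress = rng.gauss(0, 1) + (0 if high_resources else 2)
        score = rng.gauss(70, 5) + (10 if high_resources else 0)
        rows.append((high_resources, stress, score))
    return rows


rows = simulate()
overall = pearson_r([s for _, s, _ in rows], [t for _, _, t in rows])
within = {}
for group in (True, False):
    subset = [(s, t) for g, s, t in rows if g == group]
    within[group] = pearson_r([s for s, _ in subset], [t for _, t in subset])

print(f"pooled r = {overall:.2f}")
print(f"high-resource group r = {within[True]:.2f}")
print(f"low-resource group r = {within[False]:.2f}")
```

In a real study the confounders are rarely this clean or fully measured, which is why rejecting "no association" is a starting point for further questions rather than a verdict on cause.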

In summary, the null hypothesis is a critical component of scientific research, compelling scholars to test assumptions diligently against empirical evidence. By starting from a default of no effect and requiring the data to overturn it, researchers cultivate a culture of inquiry that deepens our understanding of many phenomena. Examples such as coffee consumption, smoking, and the effects of stress on academic performance highlight the nuances of hypothesis testing and show how statistical scrutiny can influence personal behavior as well as public health policy.

As we navigate a world teeming with information, the appeal of the null hypothesis lies not only in its methodical approach to inquiry but also in its capacity to provoke critical thought. It encourages people to question their instincts and extend their knowledge in an ongoing search for truth. The rich topography of data and its interpretation offers limitless avenues for exploration and reflection, uniting the scientific community in its pursuit of clarity amid the complex tapestry of human experience.